Hugging Face: Truly “Open” AI

The race between open and closed AI is heating up, but what does Hugging Face bring to it?

Hey everyone 👋, I’m Avirath. I learn a lot from analysing new products, thinking through product strategies and forming opinions on where markets and products are heading. I wanted to share that knowledge with my readers, and that’s the motive behind this newsletter.

This article breaks down Hugging Face, the opportunities it has on its hands and the challenges it faces in scaling.

Grateful to Aarush for helping edit it.

Read till the end for a surprise!

Join the fun on Twitter.

Actionable Insights

If you only have a few minutes to spare, here’s what you should know about Hugging Face:

The Democratization of AI: Hugging Face (HF) is crucial in making advanced AI technologies accessible to a broader audience. Understanding and leveraging its platform can be a game-changer for developers and businesses looking to integrate AI into their products and services.

Comprehensive Product Suite: Hugging Face’s Transformers and Diffusers libraries, Spaces, Inference API and AutoTrain together offer a powerful, versatile toolkit for AI and ML development. These tools can greatly enhance a developer’s or business’s capacity to implement, innovate on and showcase AI solutions.

Bridging Science and Engineering in AI: Hugging Face plays a crucial role in making complex AI research accessible and implementable. It breaks down advanced AI models into user-friendly tools and open-source code, ensuring that developers can stay at the forefront of AI without extensive in-house research.

GPU-as-a-Service: Reshaping AI Infrastructure: HF’s GPU-as-a-service offers a strategic advantage in managing computational resources. This service is vital for businesses facing hardware limitations, providing an economical solution to scale AI operations without investing in expensive infrastructure.

Navigating the Commercialisation of AI: HF’s open-source model faces challenges from proprietary AI models built by giants like OpenAI and Google. HF may need to develop proprietary models or form exclusive partnerships to stay competitive, providing a unique blend of open-source accessibility and cutting-edge AI capabilities.

Competition from AI Insurgents and Cloud Giants: HF competes with a range of AI platforms, from well-capitalized insurgents to cloud service giants like AWS, Google, and Azure. Its community-driven model, however, gives it a unique advantage in fostering a collaborative ecosystem. HF’s multi-cloud platform approach also offers flexibility and security to its clients, enhancing its market position.

Monetisation Strategy and Its Implications: HF’s approach to monetization, including potential subscription-based access to exclusive tools or proprietary models, will significantly impact its service offerings.

Linus Torvalds needed a free version control system. In 2005, the relationship between Linux and BitKeeper, the proprietary version control system its developers relied on, broke down when BitKeeper revoked the project’s free-of-charge license.

Years later, in a 2021 interview, Linus commented that commercial licensing makes it “really hard to build a community around that kind of situation, because the open source side always knows it’s ‘second class’.” He added that a common project motivates people to feel like full partners in a shared vision, one that democratises access for the masses.

Back in 2005, it was probably a similar state of mind that spurred him to develop Git, an open-source version control system. Git was conceived to be more than just a tool; it was an embodiment of the belief that collaborative, open-source projects could lead to more robust, innovative and democratised solutions.

If Git was the free version control system, GitHub brought it to the developer community and beyond. GitHub made it easier for developers to collaborate on projects, share code and track changes through a more user-friendly interface than Git itself offered. It democratised access to, and search across, the world’s code: it hosts over 372 million repositories, including at least 28 million public ones, and supports over 4 million organisations.

Glance at Hugging Face, and some of its parallels with the world’s largest home for code are immediately obvious. Much like GitHub, Hugging Face emerged as a pivotal platform, but in the realm of artificial intelligence and machine learning. Founded in 2016 by Clément Delangue, Thomas Wolf and Julien Chaumond, Hugging Face initially gained recognition for its friendly conversational AI chatbot. However, it rapidly evolved into something far more significant.

The company has positioned itself as a central hub for the AI and machine learning community. It hosts a wide array of state-of-the-art models, from natural language processing to computer vision, making these cutting-edge technologies available to a broader audience. By fostering a community where developers and researchers can easily share, iterate and collaborate on AI models, Hugging Face has created an ecosystem reminiscent of GitHub's repository network, but for AI.

It serves everyone from acclaimed PhD researchers and budding tinkerers to giants like Meta AI, Google DeepMind, OpenAI, Stability AI and many more. Looking ahead, it is probably undervalued. At first glance, its emoji-like mascot gives off a naive vibe; dig deeper and you see that its flywheel powers countless important AI products. Over time it has grown so important that launching on Hugging Face has become a prerequisite for much new research and many new applications. That is remarkable for a company only eight years old, considering how much the research community prefers to stick with established tools.

In today’s piece, we’ll explore the intricacies of Hugging Face and what the future holds in terms of possible iterations. In doing so, we’ll chronicle Hugging Face’s origins, dissect its accelerating flywheels, highlight critical risks and offer our perspective.

Origins

Thomas Wolf couldn’t take a moment’s rest.

He’d spent the entire weekend porting Google’s then newly released BERT model from TensorFlow to PyTorch. Little did he and his co-founders (Clément Delangue and Julien Chaumond) know that this was one of those moments that would change the trajectory of what they’d been working on for over two years.

Clément Delangue and Julien Chaumond had met online in 2016, when each of them happened to be working on a collaborative note-taking app that didn’t show much promise. A while later, they met Thomas Wolf, a college friend of Chaumond’s. All three had enjoyed successful stints before coming together.

Clément had worked at Moodstocks, a startup that built the technology behind Google Lens. Julien Chaumond held degrees from Stanford and École Polytechnique, while Wolf had a doctorate in physics and had authored papers in ML. Together, the trio promised something exciting entrepreneurially.

Sure enough, after deliberation and discussion, they decided to build an interactive AI chatbot targeted at teenagers, almost an AI Tamagotchi x ChatGPT app. They poured their energy into the concept for nearly three years, securing pre-seed and seed investments. Users embraced the idea, exchanging billions of messages with the bot.

Startup success is rarely so simple, though. While Delangue, Wolf and Chaumond had set out to build this cool personal bot, finding product-market fit proved challenging.

However, obsession often finds a way through. Wolf’s weekend spent porting BERT from TensorFlow to PyTorch, hosting it on GitHub and tweeting about it turned out to be a fateful moment.

Within hours the tweet had over 1,000 likes. The trio were astounded. After exploring a little more and posting a few more models on their GitHub, they had a lightbulb moment.

AI and the deep learning revolution had grown too big for individual repositories and models to be discovered on GitHub. Curation, discovery and education are areas GitHub has historically been slow to iterate on, and AI needed all three.

Enter Hugging Face as we know it today: a digital alchemist of the AI era, where developers and researchers can find, use and share advanced AI models easily.

Products

The Power of Transformers: In 2017, a transformative technology emerged in the form of ‘transformers’, courtesy of Google and the University of Toronto. This architecture revolutionized language analysis by evaluating the relationship and importance of words in a sentence (a mechanism called self-attention), outperforming traditional sequential NLP models in speed and efficiency. Because transformers process all the words in a sentence in parallel rather than one at a time, they make good use of GPUs, which makes them well suited to NLP training.
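To make “evaluating the relationship and importance of words” a little more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification: real transformers learn separate query, key and value projection matrices and run many attention heads at once, whereas this toy reuses the raw word vectors (made-up numbers) for all three roles. Notice that each word’s row is computed independently of the others, which is exactly the kind of work GPUs can do in parallel.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    For every query (word), score its similarity to every key, normalise the
    scores with softmax, and return the weighted average of the value vectors.
    Each query row is independent of the others, so all rows could be computed
    in parallel.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Dot-product similarity to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        attn = softmax(scores)
        all_weights.append(attn)
        # Blend the value vectors according to the attention weights.
        dim = len(values[0])
        outputs.append([sum(a * v[j] for a, v in zip(attn, values)) for j in range(dim)])
    return outputs, all_weights


# Three toy 2-d "word" vectors (made-up numbers), reused as queries, keys and values.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = self_attention(words, words, words)
for row in weights:
    print([round(w, 3) for w in row])
```

The third vector shares a component with each of the other two, so its row puts the most weight on itself and splits the rest evenly between the first two.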

While tech giants like Google and OpenAI swiftly adopted transformers to create large language models (LLMs) like BERT and GPT-3, the steep cost and resource requirements posed challenges for many. Addressing this gap, Hugging Face launched its Transformers library, a beacon for developers and organizations. This open-source repository houses an array of popular language (now expanded to multimodal) models, accessible via an API, allowing developers to fine-tune these models for tailored applications.

With Transformers among the fastest-growing open-source projects, Hugging Face counts 10,000 organisations among its loyal, active users. The library has attracted more than 1,440 contributors, with 75,000 stars and 16,400 forks, and on average over 50,000 users download models from Hugging Face each day.

Diffusers Library: Launched as a response to the evolving needs in AI, the Diffusers library specializes in diffusion-based generative models, a technology that’s rapidly gaining prominence for its ability to produce high-fidelity, creative outputs. The Diffusers library complements Hugging Face’s existing offerings, like the Transformers library, by providing new capabilities in the generative AI space.

Spaces: A Developer’s Playground: Hugging Face goes beyond model access with Spaces, a platform for developers to build, host and showcase their NLP, computer vision and audio models. Spaces offers interactive demos and a git-based workflow, making it an ideal portfolio-building tool for developers. As of September 2023, it hosts over 7,500 model demos, including noteworthy ones like DALL-E mini and Stable Diffusion, and imposes no limit on the number of hosted demos.

Inference API: Integration Made Easy: For organizations looking to integrate ML models seamlessly, Hugging Face’s Inference API is the key. It not only hosts thousands of models but also supports large-scale models, catering to enterprise-level needs with capacity for up to 1,000 API requests per second.
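As a rough illustration of what this integration looks like, the sketch below builds (but does not send) an HTTP request to the hosted Inference API using only Python’s standard library. The endpoint format `https://api-inference.huggingface.co/models/<model_id>`, the Bearer-token header and the `{"inputs": ...}` JSON body reflect the serverless API as documented around 2023 and are assumptions here, so check the current Hugging Face docs before relying on them. `build_inference_request` is a hypothetical helper, not part of any Hugging Face library, and the token is a placeholder.

```python
import json
import urllib.request

# Assumed serverless Inference API endpoint format (circa 2023); verify against current docs.
API_BASE = "https://api-inference.huggingface.co/models/"


def build_inference_request(model_id, text, token):
    """Build (but do not send) a POST request asking a hosted model to run inference."""
    body = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        API_BASE + model_id,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",  # personal access token
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_inference_request(
    "distilbert-base-uncased-finetuned-sst-2-english",  # a public sentiment model on the Hub
    "Hugging Face makes this easy.",
    token="hf_your_token_here",  # hypothetical placeholder, not a real token
)
print(req.get_method(), req.full_url)

# To actually call the API (needs network access and a valid token):
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read()))
```

The point is how little code is involved: one URL per model, one token and a JSON payload, with the GPUs and model weights living on Hugging Face’s side.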

AutoTrain: AI Simplified: Lastly, Hugging Face’s AutoTrain simplifies the model training process. Users upload their data, and AutoTrain automatically identifies the best-suited model and handles training, evaluation and deployment at scale, encapsulating the essence of AI democratization.
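AutoTrain’s internals aren’t covered here, so the following is only a toy illustration of the general idea behind automated model selection: train several candidate models, score each on held-out validation data and keep the winner. The three candidate “models” (a majority-class baseline, a single-threshold rule and a 1-nearest-neighbour lookup) and the one-feature spam-score data are hypothetical and made up for illustration.

```python
from collections import Counter


def train_majority(train):
    # Always predict the most common training label.
    label = Counter(y for _, y in train).most_common(1)[0][0]
    return lambda x: label


def train_threshold(train):
    # Try each training value as a cut-off; keep the one with the best training accuracy.
    best_t, best_acc = None, -1.0
    for t, _ in train:
        acc = sum((x >= t) == y for x, y in train) / len(train)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return lambda x: x >= best_t


def train_nearest_neighbour(train):
    # Predict the label of the closest training point.
    return lambda x: min(train, key=lambda p: abs(p[0] - x))[1]


CANDIDATES = {
    "majority": train_majority,
    "threshold": train_threshold,
    "1-nn": train_nearest_neighbour,
}


def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)


def auto_select(train, valid):
    """Train every candidate, score it on validation data, return the best name and all scores."""
    scores = {name: accuracy(trainer(train), valid) for name, trainer in CANDIDATES.items()}
    best = max(scores, key=scores.get)
    return best, scores


# (spam_score, is_spam) pairs: made-up data for illustration only.
train = [(0.1, False), (0.2, False), (0.3, False), (0.4, False),
         (0.6, True), (0.8, True), (0.9, True)]
valid = [(0.33, False), (0.48, False), (0.55, True), (0.72, True), (0.95, True)]

best, scores = auto_select(train, valid)
print(best, scores)
```

Real AutoML systems search far larger model and hyperparameter spaces, but the loop of train, validate and keep the best is the core of the idea.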

Key Opportunities and Features

Personally, as a student, I’ve noticed one fine distinction between a good professor and a great one: great professors are not only deeply learned in their fields but can also translate their knowledge so that students of every level understand specific concepts. Hugging Face plays a similarly vital role in the evolving AI industry, bridging the gap between science and engineering.

Bridging the gap between Science and Engineering

Complex white papers are hard to understand and implement: turning a research paper into working code requires heavy technical, mathematical and programming expertise. Hugging Face breaks papers and projects down into easily understandable documentation, provides one-call inference APIs to the most complex AI models and open-sources code (directly and indirectly).

The rapid rise of generative AI in the last two years has split the field into two factions:

Researchers and deep learning experts, often with PhDs, who work at the model level, where progress comes from updating the model itself. Companies like Midjourney, whose value proposition comes from improving their models, fall into this group.

The other faction includes engineers and product aficionados who build on top of open APIs, using AI to supercharge products across different verticals.

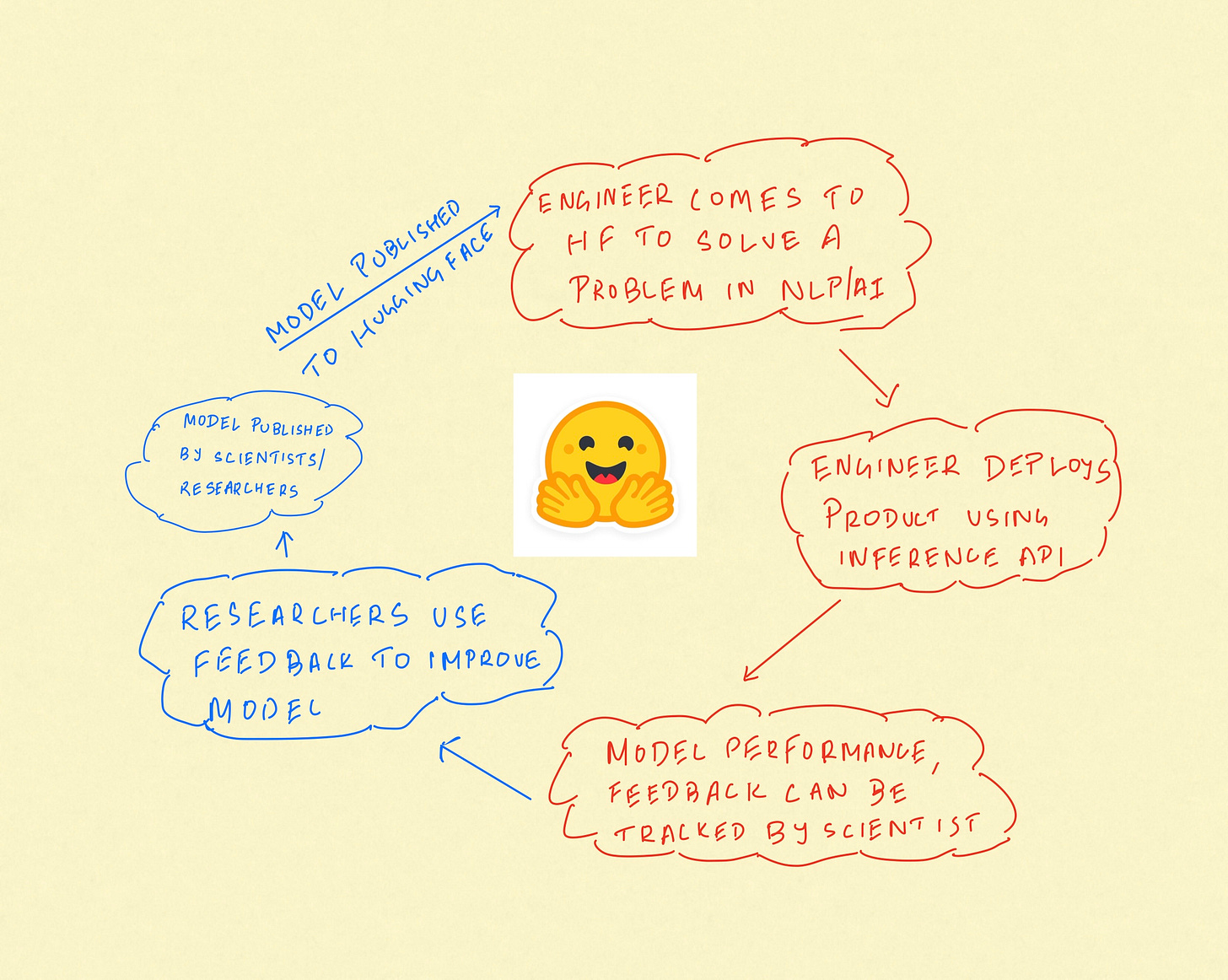

Hugging Face makes sure that no gap opens up between those who develop models and those who shape the world with them. It does this in three ways:

First, Hugging Face's open-source model library boasts over 100,000 models, most with a testing GUI, from leading companies like Meta, Stability AI, Runway ML and more. Together with more than 10,000 datasets, it forms the world's most extensive collection of resources for ML models, particularly for NLP. One of the most revolutionary things they’ve done is open up a plethora of applications through their own Transformers library, ranging from basic NLP tasks to the most complex generation tasks.

Second, Hugging Face removes friction for engineers to deploy and operationalize ML models. Instead of sifting through detailed whitepapers to find the ideal model, engineers get tailored recommendations based on the specific task at hand. It’s a great way to discover lightweight models that perform on par with bigger, more expensive ones. Hugging Face also lets users host their own models and projects at scale, backed by GPU-as-a-service, in just a couple of clicks. This helps it build an ecosystem of its own.

Lastly, and very importantly, open source always builds community around a service or product. By democratising much of the new-age AI code, HF not only benefits from marginal improvements and squashes bugs faster, but also has application engineers iterating on the latest cutting-edge models released by scientists and researchers. This gives the latter important feedback for improving future versions of their models, creating a positive feedback loop.

Another way Hugging Face could grow stronger in the future is by compiling fine-tuning data provided by various users to improve models over time. For example, if one were to fine-tune a GPT-3 model with a prior accuracy of 90% at classifying email as spam or not spam using a few thousand examples, it would raise the model’s accuracy for that one user to about 90.5%. However, if the entire community contributed to openly improving a model’s weights, a few thousand examples from each of a few hundred contributors could have a real impact on models with middling performance.
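One hypothetical way to pool many small contributions is federated averaging: each contributor fine-tunes from the same base weights, and the shared model moves by the average of their changes, weighted by how many examples each contributor used. This is a technique swapped in to illustrate the idea, not something Hugging Face is known to run, and merging separately fine-tuned models does not by itself guarantee better accuracy. The two-number “weights” and example counts below are made up.

```python
def combine_updates(base_weights, contributions):
    """Merge contributors' fine-tuned weights into one shared model.

    `contributions` is a list of (fine_tuned_weights, num_examples) pairs,
    all fine-tuned from the same `base_weights`. Each contributor's change
    relative to the base is weighted by their share of the total examples,
    which is equivalent to an example-weighted average of their weights.
    """
    total = sum(n for _, n in contributions)
    merged = list(base_weights)
    for weights, n in contributions:
        share = n / total
        for i, (w, b) in enumerate(zip(weights, base_weights)):
            merged[i] += share * (w - b)
    return merged


# Hypothetical base model and two contributors (3,000 and 1,000 examples).
base = [0.0, 1.0]
contributions = [([1.0, 1.0], 3000), ([0.0, 2.0], 1000)]
merged = combine_updates(base, contributions)
print(merged)  # → [0.75, 1.25]
```

The contributor with three times as many examples pulls the shared weights three times as hard, which is one simple way a community’s small datasets could add up.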

GPUs as a Service

The growth of generative AI models has created a paradigm shift in technology, but it has come at a price: powerful GPU hardware and services on the backend are a prerequisite. 2023 saw a global GPU shortage in which OpenAI reportedly delayed certain offerings and even paused new ChatGPT subscriptions because of it. Sam Altman even told the Senate, half-jokingly, that OpenAI was so short on GPUs that the less people used ChatGPT, the better. Such scarcity conflicts with the rapid progress that AI model and product engineering companies want to make.

In the intricate dance of supply and demand, Hugging Face is using its GPU-as-a-service model as an operational advantage, creating a robust flywheel effect to reshape the AI landscape.

Efficient GPU Utilization: At the core of this strategy is Hugging Face’s adept management of GPU resources. By provisioning the same pool of GPUs across a diverse user base whose usage peaks at different times, they've kept idle time remarkably low. This isn't just efficient resource management; it’s a shift in how computational power is distributed and consumed in the AI field.
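Why does sharing one pool help? With dedicated GPUs, each customer needs enough hardware for their own peak, so total capacity is the sum of the peaks; with a shared pool, capacity only has to cover the peak of combined demand, which is much smaller when customers are busy at different times (a standard statistical-multiplexing argument). The sketch below uses made-up demand for three hypothetical customers and is not a description of Hugging Face’s actual scheduler; it ignores real-world constraints such as cold starts, GPU memory limits and latency targets.

```python
def gpus_needed_dedicated(demand_by_user):
    # Each user gets enough GPUs for their own busiest time slot.
    return sum(max(slots) for slots in demand_by_user.values())


def gpus_needed_pooled(demand_by_user):
    # One shared pool only has to cover the busiest slot of combined demand.
    combined = [sum(slot) for slot in zip(*demand_by_user.values())]
    return max(combined)


def utilization(demand_by_user, capacity):
    # Fraction of provisioned GPU-slots that are actually used.
    n_slots = len(next(iter(demand_by_user.values())))
    used = sum(sum(slots) for slots in demand_by_user.values())
    return used / (capacity * n_slots)


# GPUs requested in four time slots by three hypothetical customers (made-up numbers).
demand = {
    "research_lab": [8, 2, 0, 0],
    "startup_api": [1, 6, 2, 1],
    "hobbyist": [0, 0, 3, 4],
}

dedicated = gpus_needed_dedicated(demand)
pooled = gpus_needed_pooled(demand)
print(dedicated, pooled)  # → 18 9
print(utilization(demand, dedicated), utilization(demand, pooled))  # → 0.375 0.75
```

In this toy case the shared pool serves the same demand with half the GPUs and doubles utilization, which is the cost advantage that feeds the flywheel described here.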

Economies of Scale in Action: This strategic allocation allows Hugging Face to continually invest in expanding their GPU arsenal. As their computational resources grow, they can cater to an even wider audience, scaling up operations while maintaining, or even reducing, costs. This scalability isn't just about growth; it’s about fortifying their position in the market, creating a moat that competitors find challenging to breach.

Undercutting the Competition: With an ever-expanding fleet of GPUs at their disposal, Hugging Face is well-positioned to offer more competitive pricing than their rivals. This isn't merely a race to the bottom on cost; it's about delivering unparalleled value, making top-tier computational resources accessible to a broader spectrum of users, from solo developers to large enterprises. In practice, pricing today is mixed: compared with Replicate and Paperspace, HF’s Inference Endpoints cost about a dollar per hour more than Replicate for A100s, while Spaces are about a dollar per hour cheaper to run on A100s but lack API-calling ability.
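To put “a dollar an hour” in perspective, assume an A100 endpoint that runs around the clock. A month then has about 24 × 365 / 12 = 730 hours, so the price gap works out to roughly:

```latex
\Delta C_{\text{month}} = \Delta p \times h \approx \$1/\text{h} \times 730\,\text{h} = \$730 \text{ per A100 per month}
```

Workloads that scale down to zero when idle would see a proportionally smaller difference.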

Creating a Virtuous Cycle: This approach has set in motion a virtuous cycle: efficient GPU utilization leads to cost savings, which fuels further investment in resources, attracting more users and driving down costs further. This flywheel effect is propelling Hugging Face to new heights, enabling them to not just participate in the AI revolution, but to actively shape its trajectory.

Expansion to other Services

When individuals and businesses use Hugging Face to create AI-driven innovations, the resulting value is not easily captured by the company. It tends to be captured either by the developers of the foundational libraries Hugging Face builds on, or by the end users who use Hugging Face’s resources to develop their own products. To capture more of that value, here are a few services it could expand into:

Google Colab is a great way to prototype and test code for ML/AI projects, but HF could create a better notebook environment, one where users can work with its expansive set of open-source models alongside the many guides it publishes.

Streamlit is one of the leading frameworks in the Python developer community for building MVP-stage AI apps. It provides users with several reusable components but is limited when it comes to caching, memory storage and even deployment to the top cloud providers. While you can already host a Streamlit app on Hugging Face Spaces, HF could partner with Streamlit to host apps through its cloud partners. Hugging Face does have a similar framework, Gradio, that powers interface development, but it is compiler-friendly, which makes it difficult to iterate on changes rapidly, and it offers a very limited set of components. Alternatively, investing more resources in Gradio’s development could be another viable option!

Key risks

Commercialisation of AI

In the vast tussle among leaders in AI, Hugging Face confronts a formidable challenge posed by giants like OpenAI and Google (through Gemini). These entities, once champions of the open-source ethos, are gradually pivoting towards more closed, proprietary models that deliver exceptional performance. This shift represents a potential threat to Hugging Face's open-source-driven ecosystem.

As companies like OpenAI transition to offering exclusive APIs and multimodal AI applications, they could draw users away from open-source platforms. The commercialization of these high-performing models could create an environment where Hugging Face's open-access model faces stiff competition. The largest challenge for Hugging Face would come if a handful of models (like GPT) ultimately captured the whole market.

Hugging Face, known for its vast repository of open-source models, might find itself at a crossroads. The growing trend of proprietary AI solutions challenges the platform to evolve, potentially reconsidering its open-source-centric approach to remain competitive.

To counter this emerging threat, Hugging Face might need to develop its own proprietary models. These models would need to match or compete closely with the likes of Meta's LLaMA models and others. The more segmented this market is, the better it is for Hugging Face. Investing in such in-house development could be pivotal in retaining its user base and attracting new users seeking cutting-edge AI solutions. Ease of use in chat interfaces and developer tools that compete with Bard, GPT and their APIs will be essential to staying competitive!

Heavy Competition

Hugging Face faces competition from fellow insurgents, platforms, and incumbents.

HF will want to pay closest attention to other well-capitalized insurgents with designs on dominating open-source AI or models. The landscape resembles a Game of Thrones of sorts, with House H2O.ai, House OpenAI, House Gensim, House AllenNLP and several other budding competitors. Among these, H2O.ai emerges as a formidable rival. While Hugging Face thrives on a community-driven model, fostering a collaborative ecosystem for data scientists and engineers, H2O.ai caters to a different market. It targets corporate sectors like finance, healthcare and retail with its AutoML platform and has garnered a client base of over 18,000 organizations. This contrast in business models, Hugging Face’s community orientation versus H2O.ai’s corporate focus, defines the competitive landscape.

However, Hugging Face’s community-centric approach could be its ace in the hole. The platform’s thriving community not only contributes to its rich repository of models but also creates a network effect that could be a significant advantage over competitors like H2O.ai. For instance, Hugging Face’s ‘datasets’ repository outshines H2O.ai’s ‘h2o-3’ in popularity and engagement, indicating a more active and passionate user base.

Yet the biggest competitive pressure for Hugging Face may come from the cloud giants: AWS, Google and Azure. AWS’s SageMaker offers comprehensive ML solutions, Google leads in ML research, and Azure, backed by Microsoft’s $10 billion investment in OpenAI and exclusive GPT licensing, is rapidly expanding its ML offerings. Despite these challenges, Hugging Face has cleverly positioned itself through strategic partnerships, like the one with SageMaker, which lets users train Hugging Face models on AWS while keeping their data there, balancing cooperation with competition. The win-win scenario is one where Hugging Face’s models, with Hugging Face’s front end, are packaged into cloud services from Microsoft, Google and Amazon, so that its multitude of models can be deployed at scale.

One of Hugging Face’s strongest advantages, akin to Snowflake’s strategy, is its multi-cloud platform approach. In an era where businesses seek to mitigate risks by not relying on a single cloud service provider, Hugging Face’s ability to operate across various cloud platforms positions it uniquely in the market. This strategy not only enhances business security and stability for clients but also positions Hugging Face as a versatile and adaptable player in the AI domain.

Monetisation

Hugging Face has worked hard to build a community, but a startup ultimately has to make money. Clément Delangue has said he considers usage to be deferred revenue. However, its monetization strategy encounters unique challenges due to the nature of its value creation. While Hugging Face significantly eases the process for a multitude of individuals and companies to develop valuable AI-driven solutions, capturing the value generated is complex. The value, rather dispersed, tends to be absorbed either upstream (by creators of the original libraries upon which Hugging Face builds) or downstream (by users who leverage Hugging Face’s tools, libraries and models to create end products). Businesses are slowly signing up with Hugging Face, but it will be essential to monetise through individual users and direct customers too. Since Hugging Face sits at the midpoint of this chain, finding a way to monetize without disrupting the open-source ethos at its core is a delicate endeavor.

One possible monetisation strategy, as mentioned earlier, is to lease cloud services from the Big Three, combine them with HF’s huge library of models, and charge the cloud providers commission or distribution fees in exchange for the visibility HF gives them. Another option may be to evolve the business model to include subscription-based access to exclusive tools, such as additional Spaces, or tiered services with limited access to exclusive platform-only or proprietary models (this would require HF to build their own models or lease them exclusively), balancing open-source accessibility with revenue generation.

Conclusion

As we look to the future of Hugging Face, it’s clear that they stand at a crucial juncture in the AI landscape. From the transformative impact of its Transformers and Diffusers libraries to the collaborative haven of Spaces, Hugging Face has not only democratized access to cutting-edge AI technology but has also fostered a thriving community of innovators and creators.

The challenges, however, are as real as the opportunities. The recent privatisation of cutting-edge models doesn’t just pose a threat to Hugging Face but to the open-source ecosystem as a whole. Hugging Face should invest in developing its own models or in forming exclusive partnerships with other core AI companies. At the same time, monetising its open-source efforts through creative partnerships is essential too.

As we watch Hugging Face adapt, evolve, and innovate, one thing is certain – its role in shaping the future of AI is undeniable. The journey of Hugging Face is not just about a platform; it’s about the evolution of an ecosystem where technology meets creativity, collaboration fuels innovation, and the future of AI is for everyone to shape and share.

If you have any feedback or suggestions, do leave them in the comments section. I’ll be sure to take a look and may even reach out to discuss them. You can reach me at avirathtibrewala@gmail.com for any other queries or discussions.

As part of my newsletter, I want to do some moral good for the society we live in. Every time I publish a post, I’ve decided to share an artefact or excerpt on kindness and benevolence.

This week, read this amazing piece on forgiveness and compassion from Erik Torenberg.
