Hey everyone 👋 — I’m Avirath. I learn a lot from analysing new products, thinking through product strategies and forming opinions on the markets and products of the future. I wanted to share this knowledge with my readers - that’s the motive behind this newsletter.

This article is a primer on AI agents and how agents can be used to deliver utility to consumers.

Read till the end for a surprise!

Join the fun on Twitter.

Actionable Insights

If you’re short on time, here’s what you should know about the evolution of technology and AI agents:

Technological Revolutions and Web 3.0: The internet has undergone significant transformations, from static webpages to interactive marketplaces, and now to Web 3.0. This latest era emphasizes decentralization and digital ownership, empowering individuals through digital wallets, cryptocurrencies, and decentralized systems.

AI Evolution: AI has also evolved through distinct phases. Initially, it relied on statistical methods for simple tasks. The second era saw the rise of deep learning, enabling complex applications in image and speech recognition and natural language processing. Now, we are entering an era of general intelligence where AI capabilities are more accessible to a broader audience through open-source software and APIs from companies like OpenAI, Anthropic, and Google.

The Rise of AI Agents: The next leap in AI is the development of AI agents. These agents can autonomously perform tasks, interact with users, and adapt to new information without continuous human intervention. They are poised to revolutionize various fields by managing complex, multi-step processes and improving efficiency in customer service, research, and conversational commerce.

Challenges in Consumer AI Devices: While consumer-focused AI devices like Humane’s AI Pin and Rabbit’s R1 aim to perform tasks autonomously, they face significant challenges. Competing with integrated smartphone features and convincing users to adopt a secondary device remains a hurdle. Prototyping within existing platforms like customer service and workflow tools offers a more practical entry point.

Potential of AI in Customer Service: Companies like Freshworks and Intercom have integrated AI into their customer support platforms, enhancing efficiency. However, current AI functions more as an assistant providing information rather than autonomously completing tasks. Transitioning to AI agents capable of handling entire processes, such as completing transactions or managing orders, represents a significant opportunity.

Zapier and Workflow Automation: Zapier exemplifies the potential of AI in automating workflows. By integrating natural language processing, Zapier can simplify the creation of automated workflows. However, the current AI capabilities assist with parts of the process rather than handling entire workflows autonomously. Developing agents that can orchestrate multiple tools and products within unified workflows is the next step.

Integrations and Interoperability: For AI agents to be effective, they need robust integrations with external tools and data sources. Companies like Zapier, with their extensive network of integrations, are well-positioned to lead this development. Interoperability among specialized agents focusing on different tasks can enhance flexibility and efficiency.

Addressing AI Hallucinations: AI systems can produce outputs that seem plausible but are incorrect. Ensuring accuracy and reliability is crucial, especially for agents performing complex tasks. Developing evaluation systems that allow agents to backtrack and correct errors, along with incorporating human feedback, will be vital to advancing AI agents.

Visual Language Models (VLMs): GUI-based interactions present challenges for purely language-based agents. VLMs offer a solution by processing visual signals from GUIs, providing a richer understanding of content. This capability is essential for AI agents to interact effectively with modern user interfaces.

Realistic Development Timeline: The integration of AI assistants in existing SaaS and consumer features is the most immediate opportunity. Following this, unsupervised workflow automation represents the next step, requiring advancements in reliability and cost optimization. Complex workflows will build on these foundational capabilities.

Every technology undergoes multiple revolutions, each a transformative leap forward. The internet's journey began with static webpages, mere silos of information. These early sites were largely read-only, offering limited interactivity and serving as digital pamphlets rather than dynamic platforms. The second wave introduced interactivity, where users could click buttons, toggle settings, and make online payments, ushering in the marketplace era. Websites became transactional hubs, enabling e-commerce and real-time user engagement, fundamentally altering how we shop, communicate, and access services.

Now, in the epoch of Web 3.0, we've embraced digital wallets, cryptocurrencies, and decentralization—innovations that shift control and ownership from centralized entities to individual users, empowering them to own a piece of the digital landscape - a big nod to the democratisation of

AI and machine learning have experienced a similar evolution. The first era of AI was rooted in statistical methods, where simple regression models and classification systems tackled straightforward tasks like sales predictions. This was the domain of pure statistical machine learning, grounded in linear algebra and probability theory. Early AI systems were limited in scope and often confined to well-defined problems with clear parameters.

The second era brought forth the age of deep learning, a time when neural networks began to mimic the complexities of the human brain. AI applications became more sophisticated, finding their place in Netflix's recommendation engine and tackling intricate scientific problems, leveraging vast datasets and computational prowess. This era saw the rise of powerful algorithms capable of image and speech recognition, natural language processing, and autonomous systems, driving significant advancements in technology and industry. However, the most advanced systems were limited to organisations and individuals with a lot of money and compute available.

Now, we've entered the third era: an era of general intelligence. This phase is akin to the decentralization of Web 3.0, where the power of AI is no longer confined to tech giants and specialized industries. Open-source software like Meta’s Llama, hubs like Hugging Face and black box APIs from OpenAI, Anthropic and Google have partially democratised AI, making it accessible across a vast array of fields and to the many people who want to build applications with these previously hard-to-replicate models. Just as Web 3.0 empowers individuals with ownership and control over their digital interactions, the current wave of AI distributes intelligence widely, enabling anyone to harness its capabilities.

The next step in this evolution appears to be the rise of AI agents. These agents represent a significant leap forward in AI applications, designed to autonomously perform tasks, interact with users, and adapt to new information without continuous human intervention. AI agents can handle complex, multi-step processes, from managing customer service inquiries to conducting intricate research, automating routine tasks, and even engaging in conversational commerce. OpenAI and Google’s 2024 events highlight the community’s momentum towards this next phase. In a notable demo from OpenAI’s post-event feature, two GPT-4o agents conversed to resolve a customer dispute, showcasing how agents equipped with memory, access to external/user data, and an internal chain of thought can effectively manage multi-step tasks with minimal human oversight.

At the application layer, there’s been an equally loud but maybe not as promising chatter around agents with consumer focused devices/products like Humane’s AI Pin and Rabbit’s R1 trying to perform tasks like ordering an Uber or food from DoorDash. While they seem like great feats of engineering, these devices seem like short-lived secondary hype trailers to how much smarter the smartphone can get once Apple and Android integrate agent capabilities into their assistants.

To be fair to both Humane and Rabbit, budding developers and companies wanting to break into the consumer product agent space face a great dilemma:

Make a desktop or mobile app that interacts with other parts of the OS and a memory store and performs agent-like actions, such as making reservations on OpenTable or ordering taxis on Uber/Lyft. The risk is that iOS, Android and (maybe) Windows wipe these apps out with a future update and win through their monopolistic behaviour in matters relating to apps and app stores.

Make a device that does whatever an app can do in terms of autonomous agents and own the marketing and visibility strategy, but with no clear proposition as to why users should buy a second device.

After putting much thought into this, I believe that the best space to prototype and find a market for delivering AI agents to consumers is through innovating within or on top of existing offerings that both

directly use AI in interactions with users or customers like RAG based customer support chat

already connect to several external tools or data stores - think workflow tools

The first companies that came to my mind were customer service and workflow creation software companies. Some of them include Zapier, Intercom and Freshworks.

Freshworks and Intercom have integrated AI into their customer support platforms, significantly enhancing their product offerings and improving efficiency. However, the current role of AI in these platforms is more akin to an assistant providing helpful answers rather than autonomously completing tasks.

Freshworks utilizes Freddy, an AI-powered bot, to automate repetitive tasks, provide instant responses to customer queries, and deliver actionable insights. Freddy’s capabilities extend to predictive analytics, helping businesses anticipate customer needs and tailor their services accordingly. By leveraging machine learning algorithms, Freddy can improve over time, ensuring that customer interactions become increasingly efficient and personalized.

Intercom has developed Fin, an AI bot built on GPT-4, which represents a significant advancement in AI-powered customer support. Fin is designed to handle a wide range of customer inquiries autonomously, learning from each interaction to improve its responses. Intercom’s integration of AI focuses heavily on preventing hallucinations—situations where the AI generates incorrect information—by incorporating mechanisms to express uncertainty and escalate issues to human agents when necessary. This approach ensures that customer support remains accurate and reliable.

However, these AI systems primarily function as advanced assistants. For example, if a customer wants to cancel only a specific part of an order—a somewhat unusual request—the AI assistant can provide detailed instructions on whether and how this can be done. The AI might say, “I think you can cancel part of your order by following these steps: go to your order history, select the item you want to cancel, and choose the cancel option. I found this information in the help center, does this help?” While this guidance is valuable, the customer still needs to perform the steps themselves. The AI assists reactively by providing the necessary information but does not autonomously complete the task.

In an experiment, popular engineer and blogger Gergely Orosz had this to say about Klarna’s GPT/AI-powered customer service assistant. As noted above, these so-called AI assistants reduce friction in terms of search time but lack any proactivity in actually finishing the user’s task.

Zapier stands out as a powerful automation tool that connects various apps and services, enabling users to automate workflows without needing to write code. Its core product, the Zapier platform, allows users to create "Zaps," which are automated workflows that link different applications to perform specific tasks. With the incorporation of AI, Zapier can now handle more complex and dynamic workflows.

Zapier’s AI integration includes natural language processing to make creating Zaps easier. Users can describe what they want to achieve in plain language, and Zapier translates this into a series of actions. For instance, a user might say, “Whenever I receive an email from my boss, save the attachment to Dropbox and send me a Slack notification.” Zapier can set up this workflow automatically.
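To make the trigger-and-actions idea concrete, here is a minimal Python sketch of how the workflow in that sentence could be represented once the plain-language request has been translated. This is not Zapier's API or data model: the `Step` and `Workflow` classes, the `run` function, the app and event names and the handler functions are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    app: str                      # e.g. "email", "dropbox", "slack" (hypothetical names)
    event: str                    # what happens in that app
    params: dict = field(default_factory=dict)


@dataclass
class Workflow:
    trigger: Step                 # the "whenever ..." part
    actions: list                 # the "then do ..." parts, run in order


def run(workflow, event, handlers):
    """Run every action in order if the incoming event matches the trigger."""
    trigger = workflow.trigger
    if event["app"] != trigger.app or event["type"] != trigger.event:
        return []
    # Every trigger parameter (such as the sender) must match the event.
    if any(event.get(key) != value for key, value in trigger.params.items()):
        return []
    return [handlers[(a.app, a.event)](event, a.params) for a in workflow.actions]


# "Whenever I receive an email from my boss, save the attachment to Dropbox
# and send me a Slack notification."
workflow = Workflow(
    trigger=Step("email", "received", {"from": "boss@example.com"}),
    actions=[
        Step("dropbox", "save_attachment", {"folder": "/from-boss"}),
        Step("slack", "notify", {"channel": "@me"}),
    ],
)

# Stub handlers standing in for real integrations.
handlers = {
    ("dropbox", "save_attachment"): lambda e, p: f"saved {e['attachment']} to {p['folder']}",
    ("slack", "notify"): lambda e, p: f"notified {p['channel']} about {e['attachment']}",
}

event = {"app": "email", "type": "received",
         "from": "boss@example.com", "attachment": "q3.pdf"}
log = run(workflow, event, handlers)
print(log)
```

The point of the sketch is that the natural-language layer only has to produce a small, structured object; the hard part is the library of integrations behind `handlers`.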

However, similar to customer support AI, Zapier’s AI often assists with parts of the task rather than handling entire workflows autonomously. If a user wants a more complex workflow, such as processing attachments in a specific way before saving them, the AI might provide a series of steps to set up these actions but will still require the user to confirm and configure each part of the process.

A Brief Introduction to Agents

Agents are unique in their goal-oriented nature, distinguishing them from most technology which focuses on task substitution. Instead, agents strive to achieve broader objectives.

Each agent is driven by at least one primary goal, and potentially multiple goals.

To achieve these goals, an agent must: (1) analyze its environment, (2) create a plan and break down the goal into individual tasks, and (3) execute the plan using other agents and digital tools.

Consider the following question:

Who’s regarded as the father of the iPhone and what is the square root of his year of birth?

This is a complex question for a language model to answer. The model has to break the question down into simpler parts, then perform knowledge retrieval and a mathematical task to reach the result. Let’s assume the agent has a search engine and a calculator at its disposal.

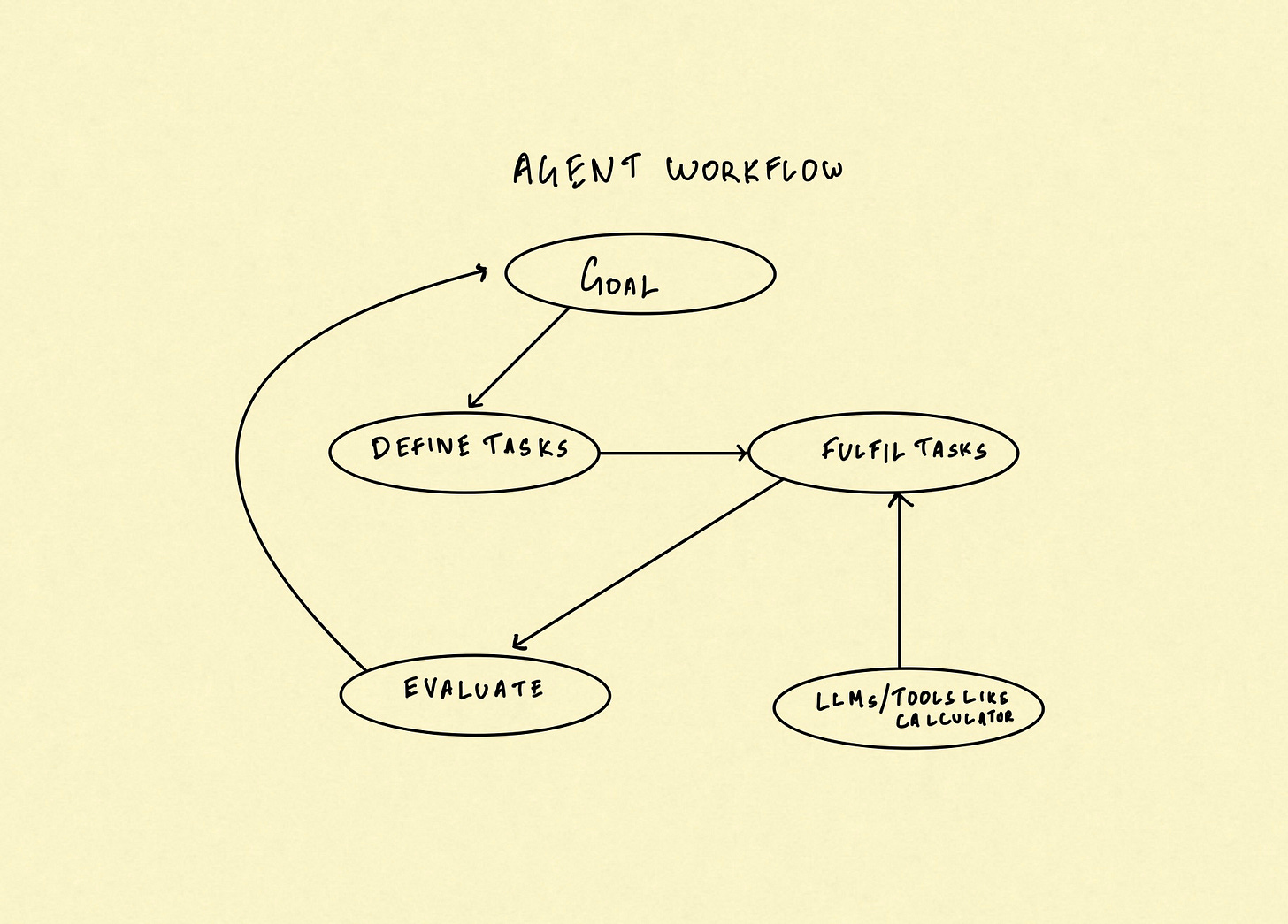

Below is a graphic depiction of the sequence of events of the agent.

A typical agent workflow would look like the following:

Evaluate Resources: The agent assesses its surroundings to determine the available resources, including external APIs.

Strategize and Break Down: It formulates a strategy by breaking the overall objective into smaller, manageable tasks and organizing the sequence in which these tasks should be executed.

Execute the Plan: Utilizing the identified resources, including external APIs/search engines, the agent follows the plan to accomplish the objective.
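The three stages above can be sketched in a few lines of Python for the iPhone question. Everything here is a stand-in: the `search` tool is a stub backed by a tiny hard-coded dictionary (its answers are illustrative, not a claim about who deserves the title), the plan is written by hand where a real agent would ask a language model to produce it, and all function names are hypothetical.

```python
import math

# Stub "search engine": a real agent would call an external search API here.
KNOWLEDGE = {
    "father of the iphone": "Steve Jobs",       # illustrative stub answer
    "steve jobs year of birth": "1955",
}


def search(query):
    return KNOWLEDGE[query.lower()]


# 1. Evaluate resources: the tools the agent knows it can use.
TOOLS = {
    "search": search,
    "sqrt": lambda text: math.sqrt(float(text)),
}


def plan(goal):
    """2. Strategize: break the goal into ordered (tool, input, output name) steps.

    Hard-coded for this one goal; a real agent would generate this with an LLM.
    """
    return [
        ("search", "father of the iPhone", "person"),
        ("search", "{person} year of birth", "year"),
        ("sqrt", "{year}", "root"),
    ]


def execute(steps, tools):
    """3. Execute: run each step, feeding earlier results into later inputs."""
    memory = {}
    for tool, template, key in steps:
        memory[key] = tools[tool](template.format(**memory))
    return memory


goal = "Who is regarded as the father of the iPhone and what is the square root of his year of birth?"
result = execute(plan(goal), TOOLS)
print(result["person"], round(result["root"], 2))
```

Note how the second and third steps depend on the outputs of the earlier ones. That dependency is what makes the question hard to answer in a single model call.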

Key Features

Ecosystem Benefits for Agents

Both Intercom and Zapier can use their position to make AI go from being used as an assistant to chat with, to using AI to accomplish complete tasks that a human might otherwise have to perform. This moves AI from being a "read-only" operation to fundamentally a "read/write" operation.

For Intercom to transition into smarter agent capabilities, they could start by focusing on creating a sophisticated conversational shopping experience. Here’s how such an experience could unfold:

Customer Query: A customer enters a query like, "What are good blue shirts I can wear for a date?"

Data Indexing: The AI agent indexes through the company’s data sources, including product catalogs and customer reviews, to find suitable options.

Showcasing Products: The agent presents a curated list of blue shirts that match the customer’s query, complete with images, descriptions, and customer ratings.

Customer Confirmation: The customer selects a shirt and confirms the purchase.

Processing Payment: The agent calls the relevant API to charge the customer’s card, ensuring a secure and seamless transaction.

Order Posting: The agent posts the order to the warehousing backend, ensuring that the item is picked, packed, and ready for shipping.

Confirmation Email: Finally, the agent sends a confirmation email to the customer, detailing the purchase and providing shipping information.

By integrating with internal data sources and functional APIs, Intercom can transform a simple customer query into a complete transactional journey. This approach not only enhances the shopping experience but also streamlines operations, showcasing a significant leap from AI as an assistant to AI as a full-fledged agent capable of autonomously managing and executing complex tasks.
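As a rough illustration of the "read-only" to "read/write" shift, the journey above could be wired together like the Python sketch below. It assumes a tiny in-memory catalogue and stub functions in place of the payment, warehouse and email APIs; none of these names correspond to real Intercom endpoints.

```python
from dataclasses import dataclass


@dataclass
class Product:
    sku: str
    name: str
    colour: str
    rating: float
    price: float


# Hypothetical catalogue standing in for the merchant's indexed data sources.
CATALOG = [
    Product("S1", "Oxford shirt", "blue", 4.6, 49.0),
    Product("S2", "Linen shirt", "blue", 3.2, 39.0),
    Product("S3", "Flannel shirt", "red", 4.8, 45.0),
]


def find_products(colour, min_rating=4.0):
    """Read step: look up well-rated products that match the query."""
    return [p for p in CATALOG if p.colour == colour and p.rating >= min_rating]


# Write steps: stubs for the payment, warehouse and email APIs.
def charge_card(product, log):
    log.append(f"charged {product.price:.2f} to card on file")


def post_order(product, log):
    log.append(f"posted order for {product.sku} to warehouse")


def send_confirmation(email, log):
    log.append(f"emailed confirmation to {email}")


def run_purchase(colour, email, choose, log):
    """Go from query to confirmed order; `choose` models the customer's confirmation."""
    options = find_products(colour)
    product = choose(options)
    if product is None:          # the customer declined, so nothing is written
        return None
    charge_card(product, log)
    post_order(product, log)
    send_confirmation(email, log)
    return product


log = []
bought = run_purchase("blue", "sam@example.com",
                      lambda options: options[0] if options else None, log)
print(bought.name, log)
```

The customer confirmation sits between the read step and the write steps on purpose: an agent that can spend money should only do so after an explicit yes.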

However, while studying this, I came to the conclusion that although conversational agent shopping experiences would be a massive step for AI agents, several challenges stand in the way of making them a reality. Convincing partner merchants to share their private/proprietary data, syncing with each of their individual backend stores and identifying the right functional APIs to call can all be expensive and time-consuming because of the specifics of each company.

At the same time, this can present itself as a huge opportunity for players like Toast, Shopify, Hubspot, and others, who’ve successfully established themselves as an ecosystem built on APIs where an organisation that uses these software services can even run their business through the command line. These companies have built extensive, clear documentation and standardized much of their functionality through APIs, making it easier to build agents around them.

To win horizontally and serve many businesses, we will need greater standardisation before an AI agents-as-a-service model can emerge. We are probably a long way from AI handling most of our shopping journeys. Simple workflows and part-by-part vertical integration will most definitely be the first steps the community has to take to make complex journeys a reality.

Agents and Importance of Integrations

The above discussion underscores the critical importance of external integrations in building effective AI agents. In this regard, Zapier is uniquely positioned, having established a vast network of integrations over the years. The next wave of AI agents that businesses will find valuable and be willing to pay for will focus on the orchestration of multiple tools and products within unified workflows. Consider this example: workflow integration apps are often utilized for compound action tasks. Through my research, I discovered that recruiters frequently connect to ATS/recruiting platforms like Greenhouse or Lever, then parse information from LinkedIn and portfolio websites to generate comprehensive summaries of candidates’ experiences.

To this effect, Y Combinator’s latest batches have seen a huge influx of startups trying to create agents that work on orchestration/automation tasks like those mentioned above.

A common thread among AI agents from such startups is that they all mention using the browser or parsing unstructured/structured web information. Several startups have started using agents to automate web tasks like getting competitor prices or making a job post on LinkedIn. Zapier has already shown its proactivity by adding a browser agent to its existing set of integrations. History has often taught us that distribution is key, and being a reliable middleman can be central to Zapier’s growth in the coming years.

An important step that workflow companies like Zapier can take to further the development of agents is interoperability. Like today's APIs, which each perform a different function, agents should specialise in certain parts of the supply chain such as browsing/information retrieval or data analysis. This could unlock greater flexibility in the use of agents, rather than building similar workflows for every new goal.

Hallucinations

A key topic in discussions of modern LLM-based agents has been “hallucination” behaviour. The term "hallucination" in the context of AI refers to the phenomenon where a large language model (LLM) or other generative AI system produces outputs that appear plausible and coherent but do not accurately reflect reality or the system's intended purpose.

Hallucinations in large language models (LLMs) stem from various superficial issues like modeling problems or prompting errors. However, the fundamental cause is that the current language modeling systems are inherently hallucination functions. Let's delve into this.

Generative AI models, including LLMs, generate outputs by capturing statistical patterns in their training data. Instead of storing explicit factual information, these models implicitly encode data as statistical correlations between words and phrases. Consequently, they lack a clear, well-defined understanding of truth or falsehood, merely producing text that sounds plausible.

This approach generally works because generating plausible text has a high probability of reproducing accurate information, assuming the training data is predominantly truthful. However, LLMs are trained on vast amounts of text data from the internet, which includes inaccuracies, biases, and opinionated/modified information. Consequently, while these models have encountered many true sentences and learned correlations that tend to produce accurate statements, they've also seen numerous variants that are slightly or entirely incorrect.

The biggest reason hallucinations occur is the lack of grounding in authoritative knowledge sources. Without a strong foundation in verified, factual information, the models struggle to differentiate between truth and falsehood, leading to hallucinated outputs. However, this is not the only issue. Even if the training data consisted solely of factual information—assuming there is enough high-quality data available—the statistical nature of language models inherently makes them prone to hallucinations.
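A toy example makes that last point tangible. The Python sketch below trains a bigram model (each word predicts only the next word) on two true sentences and then lists every sentence the model is able to generate. A bigram model is a deliberately crude stand-in for an LLM, and the corpus and function names are made up for illustration, but it shows how purely factual training data can still yield fluent false statements.

```python
from collections import defaultdict

# Training data that is 100% factual.
corpus = [
    "paris is the capital of france",
    "rome is the capital of italy",
]


def train_bigrams(sentences):
    """Record which words were ever seen following each word."""
    follows = defaultdict(set)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current].add(nxt)
    return follows


def generate_all(follows, max_len=10):
    """Enumerate every sentence the model can produce by chaining seen word pairs."""
    results = []

    def walk(token, path):
        if token == "</s>":
            results.append(" ".join(path))
            return
        if len(path) >= max_len:
            return
        for nxt in sorted(follows[token]):
            walk(nxt, path if nxt == "</s>" else path + [nxt])

    walk("<s>", [])
    return results


model = train_bigrams(corpus)
hallucinated = [s for s in generate_all(model) if s not in corpus]
print(hallucinated)
```

The model has learned that "of" can be followed by either country, so it happily pairs each city with the wrong one. Every word transition was seen in true data, yet half of the possible outputs are false.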

A good example of this in agentic behaviour could be seen when Cognition Labs released Devin, an agent developed to replace the average software engineer. Devin was given a task to create inference endpoints on EC2 in AWS for a computer vision model, but during one of the initial steps, while assessing the repository, Devin tried to access a file that did not exist. A plausible explanation is that most similar examples in the data used to fine-tune Devin, or to train its underlying model, required such a file to be accessed. This would have led Devin to try to access that file or look for a similar one, hinting at an issue in its thought process.

This highlights a key issue with LLM agents because at the end of the day they’re just great next token predictors and thus their reliability can be sloppy at times. While this is a manageable issue with information generation, deploying this in the field can mean wrong actions being taken and company money being lost. Thus, an important part of agent development work must go towards solving for hallucinations and incorporating human feedback whenever needed.

However, one of the key things Devin implemented well was a backtracking algorithm: Devin could trace back to the point where it made an error and go down an alternative evaluation or next-best route to rectify its mistake.

Tying agents to an evaluation system that lets them determine the accuracy of each step performed, measure progress towards the goal and monitor their own learning ability will be an important area of research as the future of agents unfolds.
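To illustrate the general idea of pairing an agent with a step evaluator and backtracking, here is a small Python sketch. It is not how Devin works internally, which is not described here. The repository contents, step names and the `candidates`, `step_ok` and `solve` functions are all hypothetical, and the candidate guesses are hard-coded where a real agent would ask a model for them.

```python
# Hypothetical repository contents; a real agent would inspect the file system.
REPO_FILES = {"train.py", "serve.py", "serve_config.yaml"}


def candidates(step, state):
    """Propose actions for a step, best guess first (stand-in for an LLM)."""
    if step == "pick_entrypoint":
        return ["train.py", "serve.py"]
    if step == "load_config":
        stem = state[-1].rsplit(".", 1)[0]
        return [f"{stem}_config.yaml"]
    return []


def step_ok(state):
    """Evaluator: the file chosen in the latest step must actually exist."""
    return state[-1] in REPO_FILES


def solve(steps, state=(), trace=None):
    """Depth-first search over candidate actions; returns a working path or None."""
    if not steps:
        return list(state)
    for action in candidates(steps[0], state):
        new_state = state + (action,)
        if trace is not None:
            trace.append(action)      # record every attempt, including failed ones
        if not step_ok(new_state):
            continue                  # evaluator rejected this action, try the next
        result = solve(steps[1:], new_state, trace)
        if result is not None:
            return result
        # A later step hit a dead end: fall through and try the next candidate.
    return None


trace = []
path = solve(["pick_entrypoint", "load_config"], trace=trace)
print(path)
print(trace)
```

Here the agent's first guess, `train.py`, passes the evaluator, but the config file it implies does not exist. The search returns to the first step, picks `serve.py` instead and completes the task. The trace keeps a record of the failed attempts, which is the kind of signal human reviewers would need.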

GUI Agents

Purely language-based agents face significant limitations in real-world scenarios because most software primarily interacts with humans through Graphical User Interfaces (GUIs). These challenges include:

• The absence of standardized APIs for interaction.

• The difficulty of conveying critical information such as icons, images, diagrams, and spatial relationships using only text.

• The inability to interpret functional elements like canvas and iframes in text-rendered GUIs, such as web pages, purely through HTML.

Visual Language Models (VLMs) offer a promising solution to these issues. Instead of depending solely on textual inputs like HTML or OCR results, VLM-based agents can directly process and understand visual signals from GUIs. They can simultaneously handle images, videos, and text, providing a richer understanding of the content by leveraging the complementary information from both modalities. VLMs can better grasp the context of a scene or document, making them more effective in GUI interaction tasks.

Cost

Given that agents run prompts at a cost of $0.03 per 1,000 tokens, with each prompt containing approximately 10,000 tokens, the cost per prompt would be about $0.30. If an agent operates 8 hours a day, 365 days a year, this equates to 2,920 hours annually. Assuming the agent processes one prompt per second, that is 10,512,000 prompts a year, for a total yearly cost of around $3.15 million, which is significantly higher than the cost of employing a human customer service agent or even a team of them.
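The arithmetic is easy to reproduce and to rerun with different assumptions. The short Python sketch below uses the figures from the paragraph above as defaults; the function and parameter names are my own.

```python
def yearly_cost(price_per_1k_tokens=0.03, tokens_per_prompt=10_000,
                hours_per_day=8, days_per_year=365, prompts_per_second=1):
    """Estimate the yearly spend of an agent that runs prompts continuously."""
    cost_per_prompt = price_per_1k_tokens * tokens_per_prompt / 1_000
    seconds_per_year = hours_per_day * days_per_year * 3_600
    prompts_per_year = seconds_per_year * prompts_per_second
    return cost_per_prompt * prompts_per_year


print(f"${yearly_cost():,.0f} per year")

# Sensitivity check: one prompt per minute instead of one per second.
print(f"${yearly_cost(prompts_per_second=1 / 60):,.0f} per year")
```

The one-prompt-per-second assumption dominates the result. At one prompt per minute the same agent costs roughly a sixtieth as much, so the real-world figure depends heavily on how often the agent actually needs to call the model.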

The most realistic development timeline for AI agents starts with AI assistants in SaaS and consumer features like Google Docs/Sheets and Gmail, which is the easiest and most forward-looking integration right now.

Post this, unsupervised or simple workflow automation like the ones Zapier and other workflow unifying companies like Merge/Finch handle will be the next step but will require a huge leap in reliability and cost optimisation.

Any larger and complex workflows would have to build on top of these building blocks.

If you have any feedback or suggestions, do drop them in the comments section. I’ll be sure to take a look and even reach out to discuss if possible. You can reach me at avirathtibrewala@gmail.com for any other queries or discussions.

As a part of my newsletter, I wanted to do some moral good for the society we live in. Every time I publish a post, I’ve decided to share an artefact or cool thing on kindness and benevolence.

If you’re feeling a bit of imposter syndrome or feel like you don’t belong, watch this clip from Ted Lasso on second chances in life.